I work as a Technical Expert at Zenseact (a self-driving software company owned by Volvo Cars, previously named Zenuity) in Gothenburg, Sweden, specializing in Localization and Road Estimation for autonomous vehicles. Previously, I worked for four years as a Computer Vision Researcher at Nokia Research in Finland and pursued a PhD in Computer Vision at Tampere University in Finland.

Research Interests: 3D Computer Vision, Localization, Autonomous Driving, Deep Learning

Hobbies: Piano, Karate, Trail-running, Swimming

Previous Projects (before 2018)

Vehicle Detection

Created a vehicle detection pipeline using two approaches: deep neural networks (YOLO + TensorFlow) and support vector machines (HOG + OpenCV). Optimized and evaluated the models on video data from both highway and city driving.

Autonomous Driving with Model Predictive Control

This project uses Model Predictive Control (MPC) to drive a car in a game simulator. The server provides reference waypoints (yellow line in the demo video) via WebSocket, and MPC simulates the vehicle trajectory (green line) and computes the steering and throttle commands that drive the car. The solution must be robust to 100 ms of latency, as might be encountered in real-world applications.
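One common way to cope with actuation latency is to predict the vehicle state forward by the latency interval before handing it to the optimizer. The sketch below assumes a kinematic bicycle model; the function name, the state layout, the `LF` value, and the use of throttle as a proxy for acceleration are illustrative assumptions rather than details of this project.

```python
import math

LF = 2.67  # assumed distance from the front axle to the centre of gravity (m)


def predict_state(x, y, psi, v, steering, throttle, latency=0.1):
    """Propagate a kinematic bicycle model forward by `latency` seconds.

    Throttle is treated as a rough proxy for acceleration (an assumption),
    and the steering sign convention may differ between simulators.
    """
    x_next = x + v * math.cos(psi) * latency
    y_next = y + v * math.sin(psi) * latency
    psi_next = psi + v / LF * steering * latency
    v_next = v + throttle * latency
    return x_next, y_next, psi_next, v_next
```

The predicted state, rather than the measured one, would then serve as the initial state of the MPC optimization, so that the computed commands match where the car will be when they take effect.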

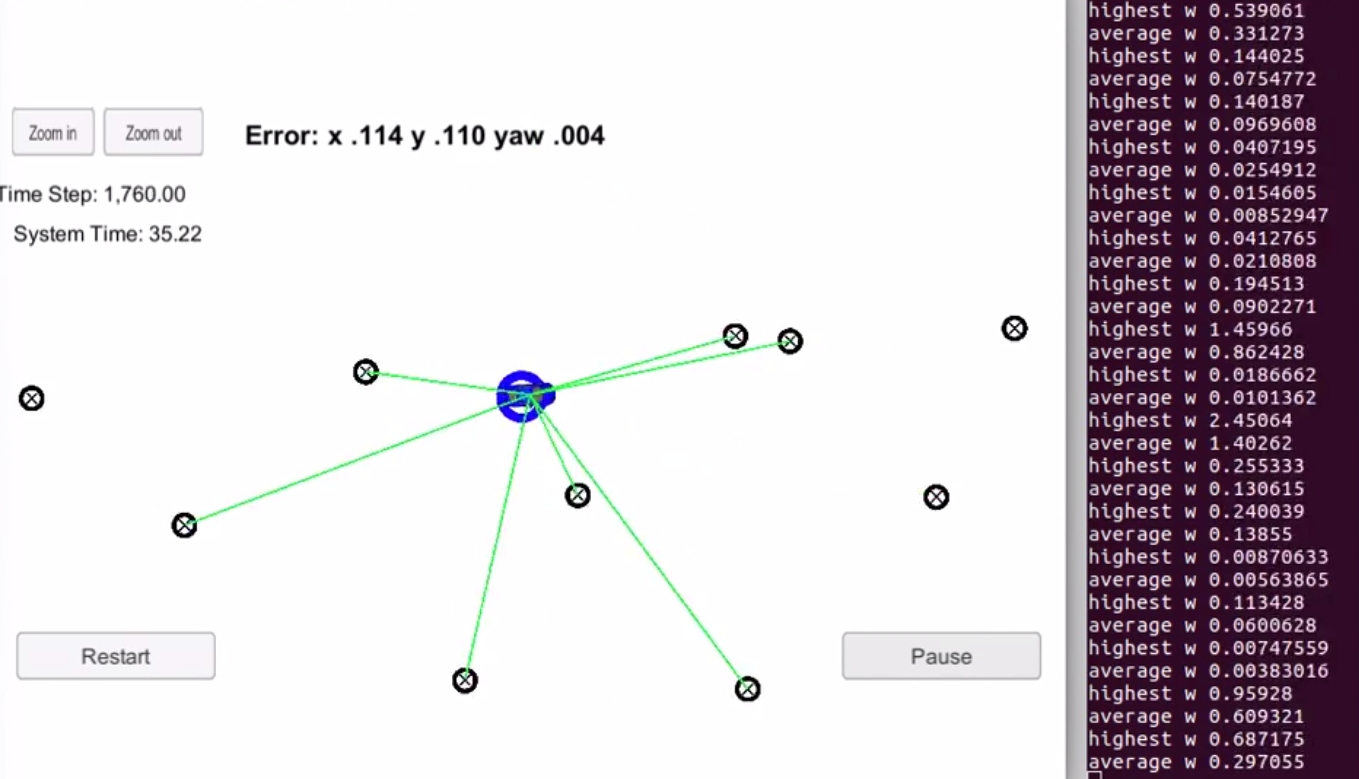

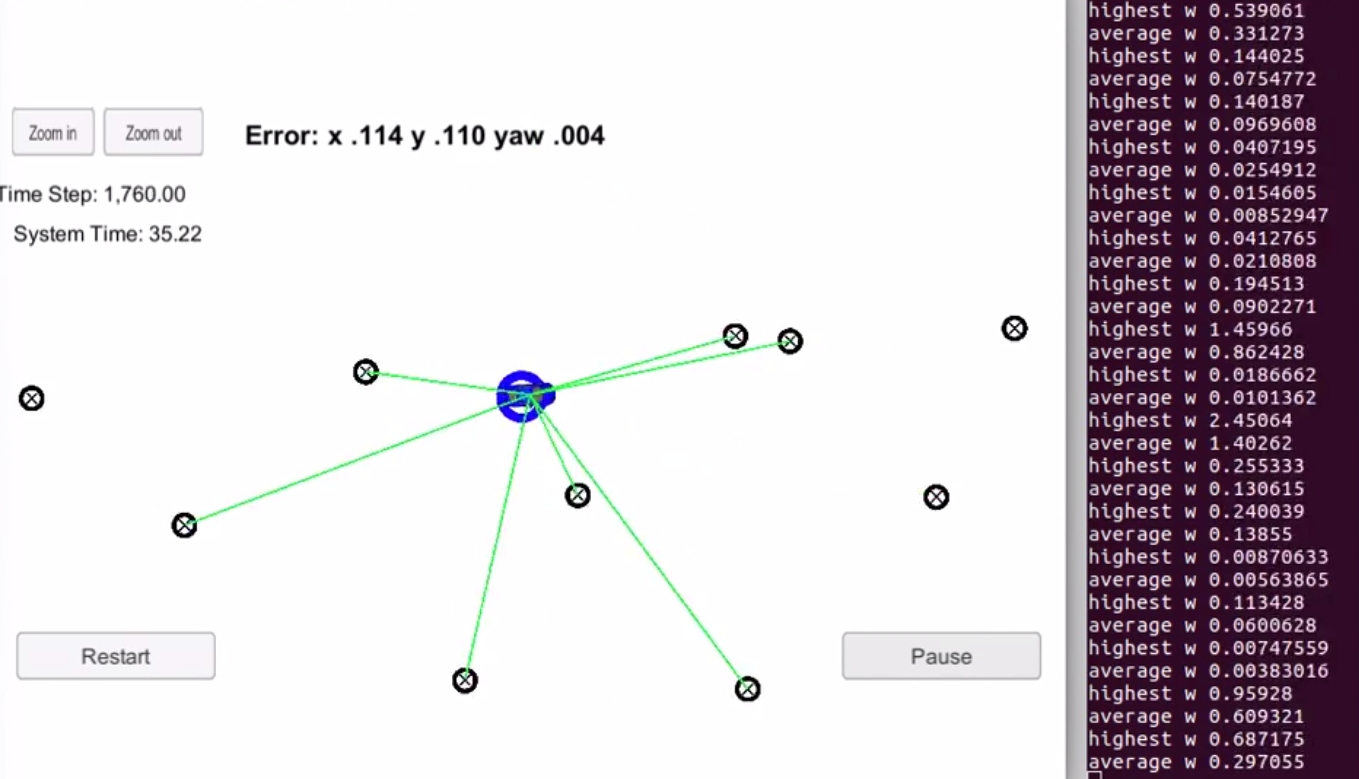

Vehicle Localization with a Particle Filter

The vehicle is transported to an unknown location. A real-time particle filter then estimates the vehicle's position and orientation, given a map, a noisy initial GPS estimate, and noisy observation data at each timestep.
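The measurement update and resampling steps of a particle filter can be sketched as follows. This is a minimal illustration, not the project code: it assumes landmark observations arrive in the vehicle frame with known data association, and all function names and noise values are hypothetical.

```python
import math
import random


def gaussian_weight(dx, dy, std_x, std_y):
    """Bivariate Gaussian density with independent x/y noise."""
    norm = 1.0 / (2.0 * math.pi * std_x * std_y)
    return norm * math.exp(-(dx**2 / (2 * std_x**2) + dy**2 / (2 * std_y**2)))


def update_weights(particles, observations, landmarks, std=(0.3, 0.3)):
    """Weight each particle (x, y, theta) by how well observations match the map.

    observations: list of (landmark_id, x_obs, y_obs) in the vehicle frame.
    landmarks: dict landmark_id -> (x_map, y_map).
    Known data association is assumed to keep the sketch short.
    """
    weights = []
    for px, py, theta in particles:
        w = 1.0
        for lm_id, ox, oy in observations:
            # Transform the observation from the vehicle frame to the map frame.
            mx = px + math.cos(theta) * ox - math.sin(theta) * oy
            my = py + math.sin(theta) * ox + math.cos(theta) * oy
            lx, ly = landmarks[lm_id]
            w *= gaussian_weight(mx - lx, my - ly, *std)
        weights.append(w)
    return weights


def resample(particles, weights, rng=random):
    """Draw a new particle set with probability proportional to weight."""
    return rng.choices(particles, weights=weights, k=len(particles))
```

Particles whose transformed observations land close to the mapped landmarks receive higher weights and are therefore more likely to survive resampling.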

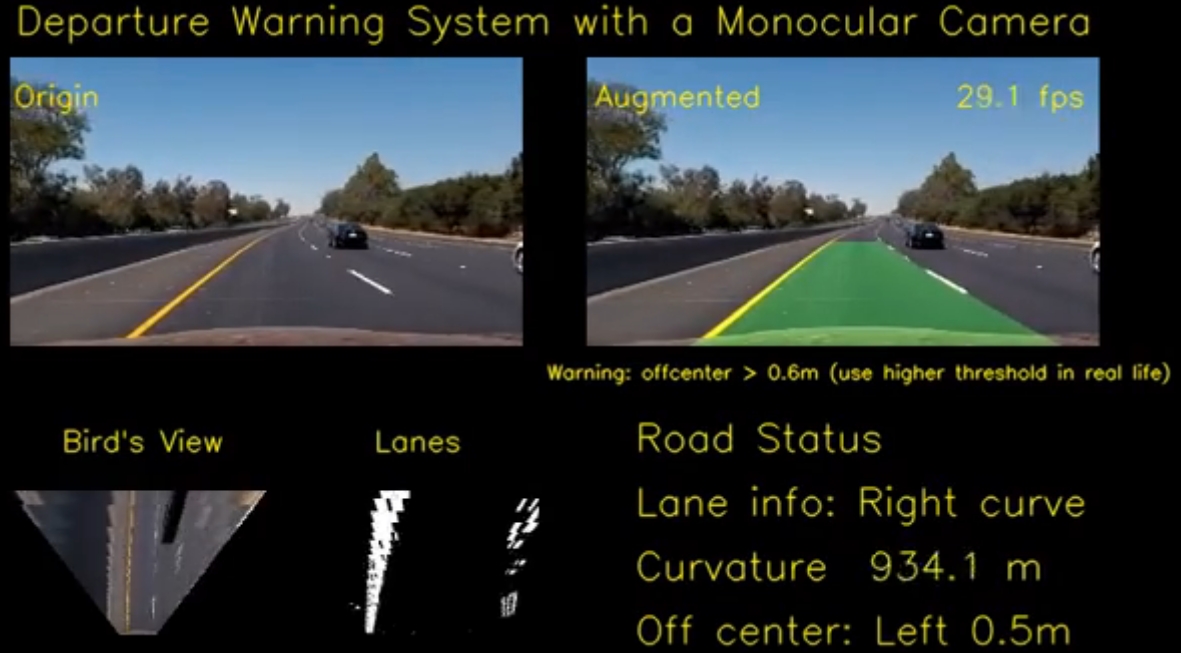

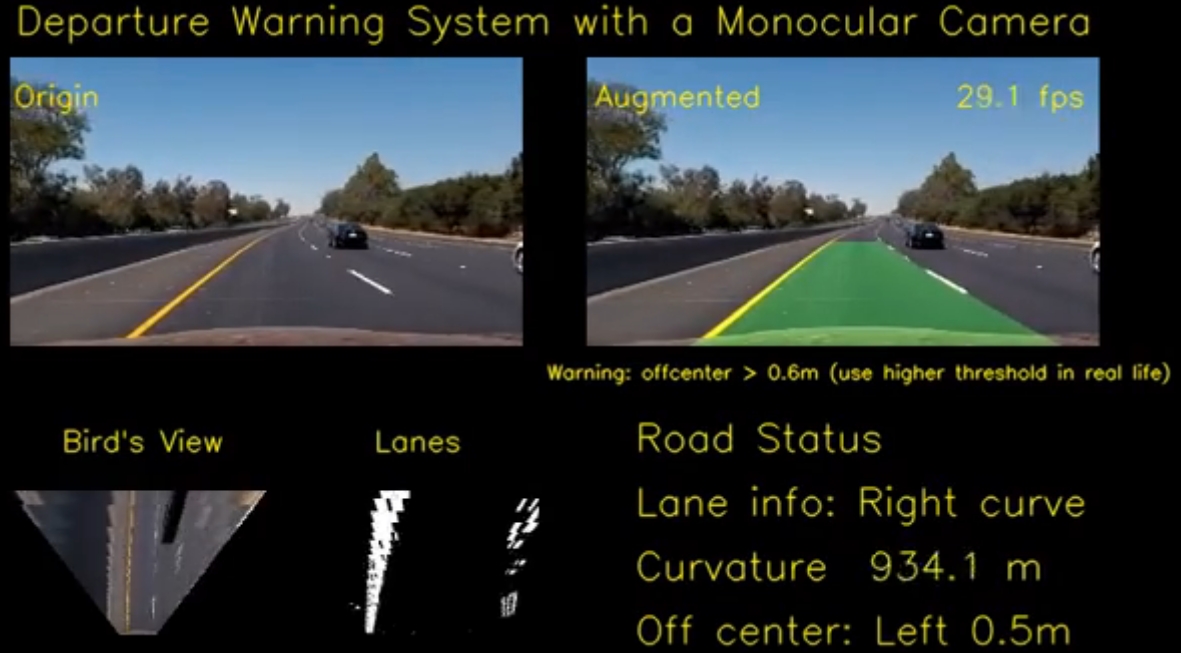

Lane Departure Warning

Designed and implemented a lane-finding algorithm and a lane-departure-warning system. Identified lane curvature and overcame environmental challenges such as shadows and pavement changes.
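As a rough illustration of the curvature and departure computations, the sketch below assumes each lane line has been fitted with a second-order polynomial x = a*y^2 + b*y + c and that the camera is mounted at the vehicle centre. Both are assumptions, and the function names and the metres-per-pixel scale are hypothetical.

```python
def radius_of_curvature(a, b, y):
    """Radius of curvature of x = a*y**2 + b*y + c, evaluated at y."""
    if a == 0:
        return float("inf")  # a straight line has infinite radius
    return (1.0 + (2.0 * a * y + b) ** 2) ** 1.5 / abs(2.0 * a)


def lateral_offset(left_x, right_x, image_width, metres_per_pixel):
    """Offset of the camera from the lane centre, in metres.

    Positive values mean the camera sits to the right of the lane centre.
    """
    lane_centre = (left_x + right_x) / 2.0
    return (image_width / 2.0 - lane_centre) * metres_per_pixel
```

A warning could then be raised whenever the absolute offset exceeds a chosen threshold.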

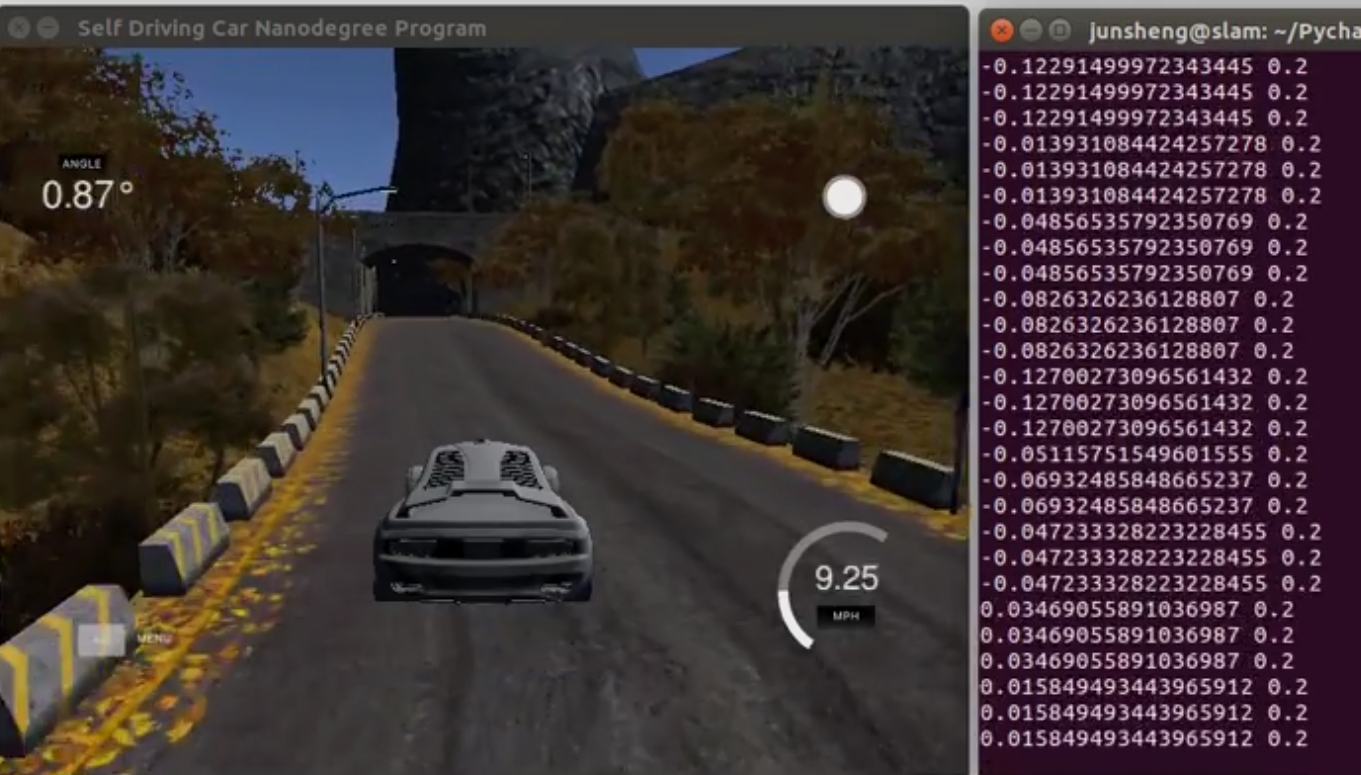

Driving behavioral cloning

Built and trained a CNN to autonomously steer a car in a game simulator, using TensorFlow and Keras. Used optimization techniques such as regularization and dropout to generalize the network for driving on unseen tracks.

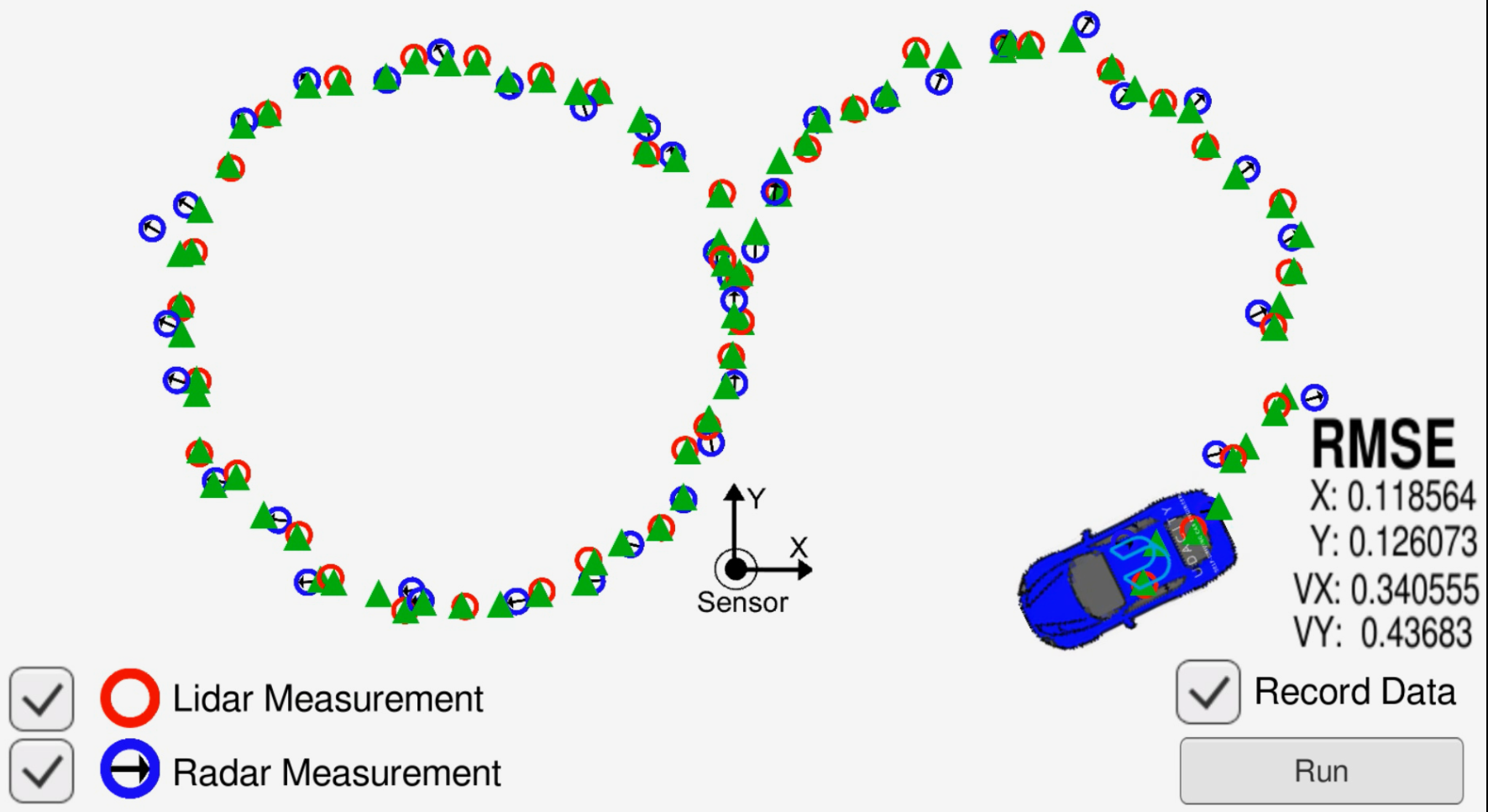

Object Tracking with Sensor Fusion-based Extended Kalman Filter

Used sensor data from both LIDAR and RADAR to track objects (e.g. pedestrians, vehicles, or other moving objects) with the Extended Kalman Filter.
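In a typical setup of this kind, the radar reports range, bearing, and range rate, which are nonlinear in a Cartesian state, so the Extended Kalman Filter linearizes the measurement function with its Jacobian. The sketch below assumes a constant-velocity state (px, py, vx, vy); the state layout and function names are illustrative assumptions.

```python
import math


def radar_measurement(px, py, vx, vy, eps=1e-6):
    """Map a Cartesian state to radar space: (range, bearing, range rate)."""
    rho = math.hypot(px, py)
    phi = math.atan2(py, px)
    rho_dot = (px * vx + py * vy) / max(rho, eps)  # guard against rho == 0
    return rho, phi, rho_dot


def radar_jacobian(px, py, vx, vy, eps=1e-6):
    """Jacobian of radar_measurement with respect to (px, py, vx, vy)."""
    c1 = max(px * px + py * py, eps)  # squared range, guarded against zero
    c2 = math.sqrt(c1)
    c3 = c1 * c2
    return [
        [px / c2, py / c2, 0.0, 0.0],
        [-py / c1, px / c1, 0.0, 0.0],
        [py * (vx * py - vy * px) / c3,
         px * (vy * px - vx * py) / c3,
         px / c2,
         py / c2],
    ]
```

A LIDAR measurement of position is linear in this state, so it can be handled by the standard Kalman update without a Jacobian.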

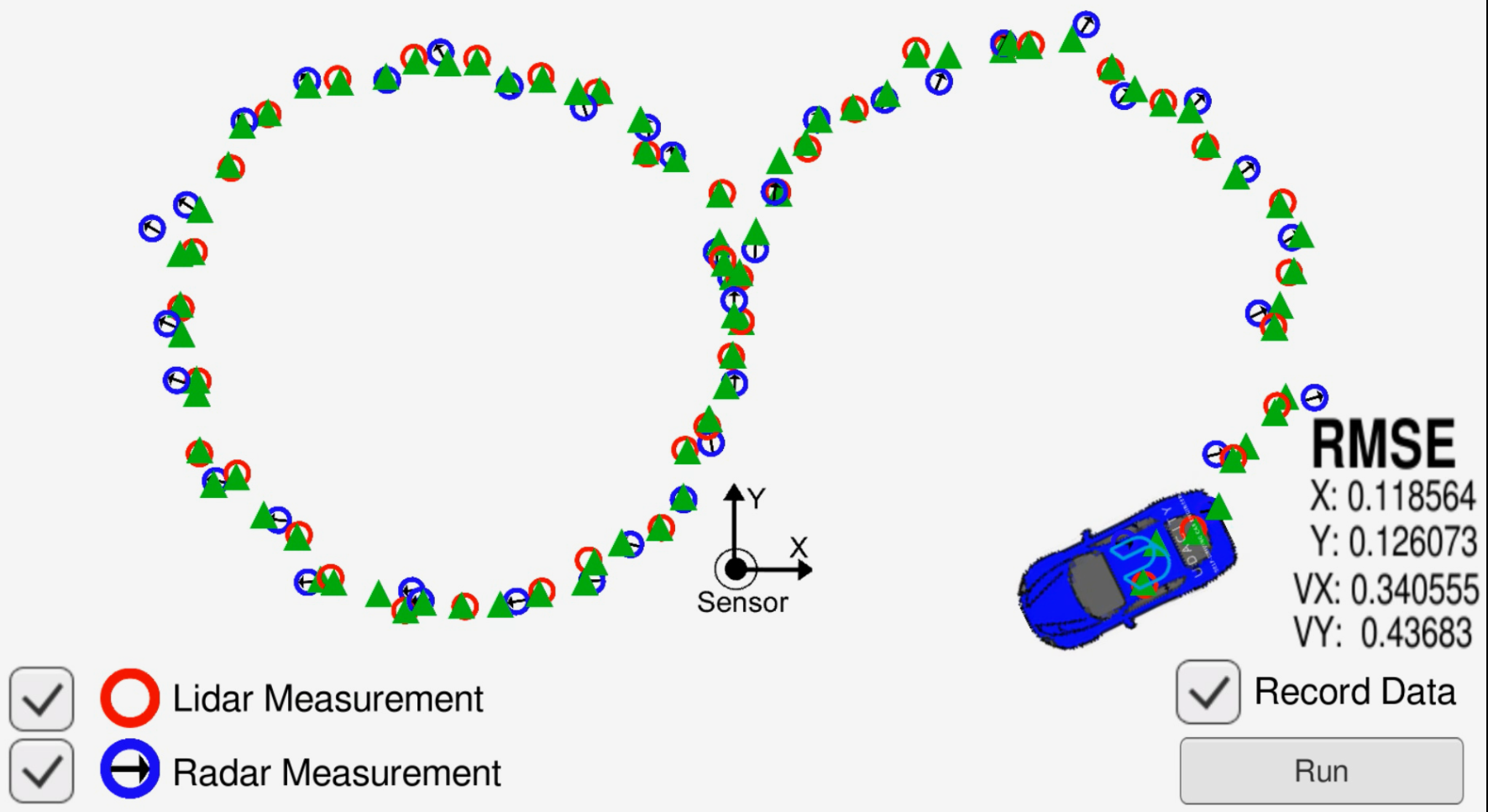

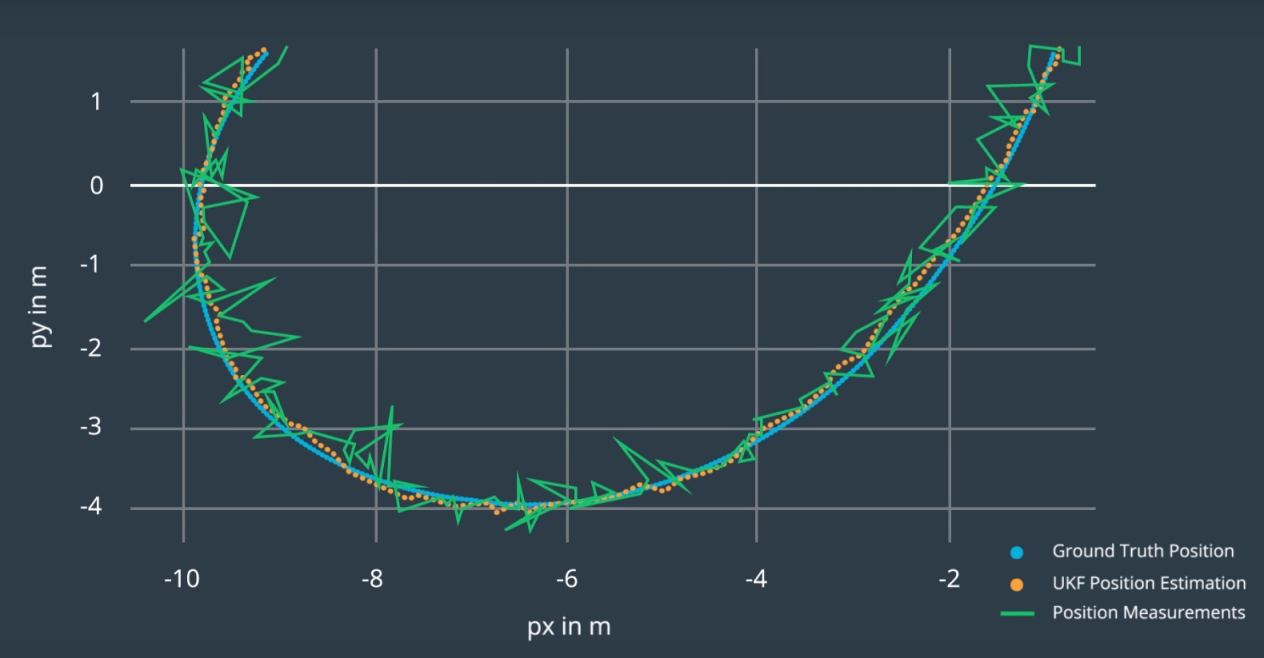

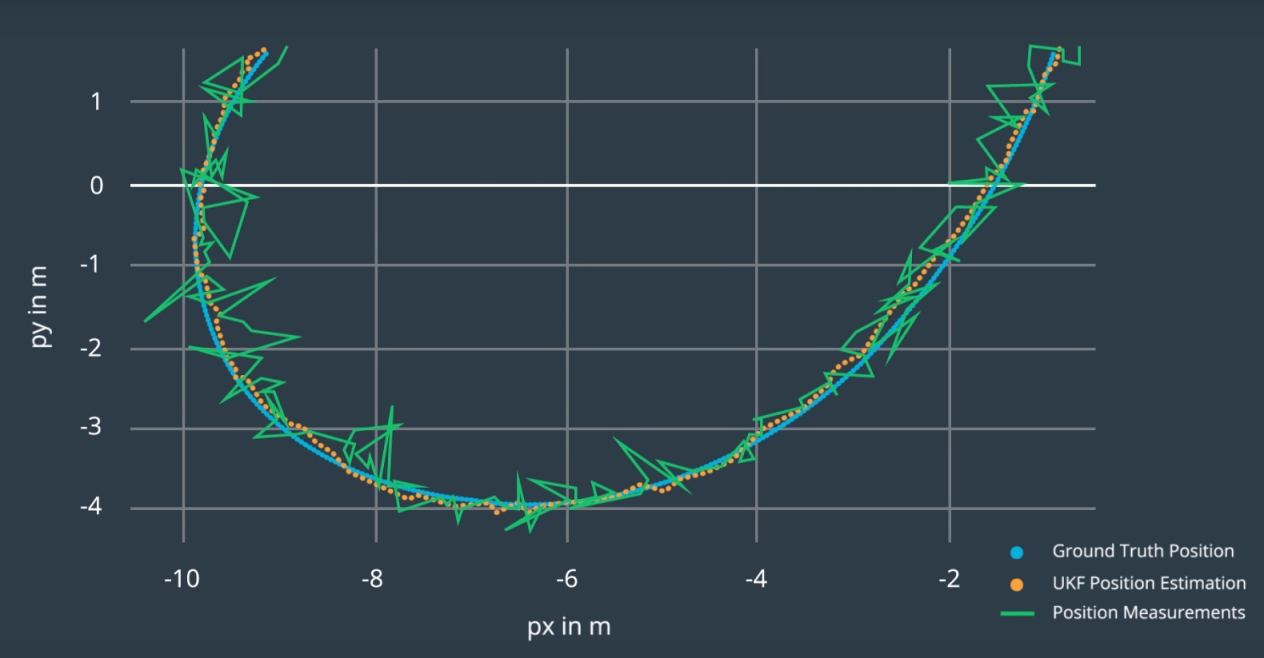

Object Tracking with Sensor Fusion-based Unscented Kalman Filter

Used sensor data from both LIDAR and RADAR to track objects (e.g. pedestrians, vehicles, or other moving objects) with the Unscented Kalman Filter.

Find Lane Lines on the road

Detected highway lane lines on a video stream. Used OpenCV image analysis techniques to identify lines, including Hough Transforms and Canny edge detection.

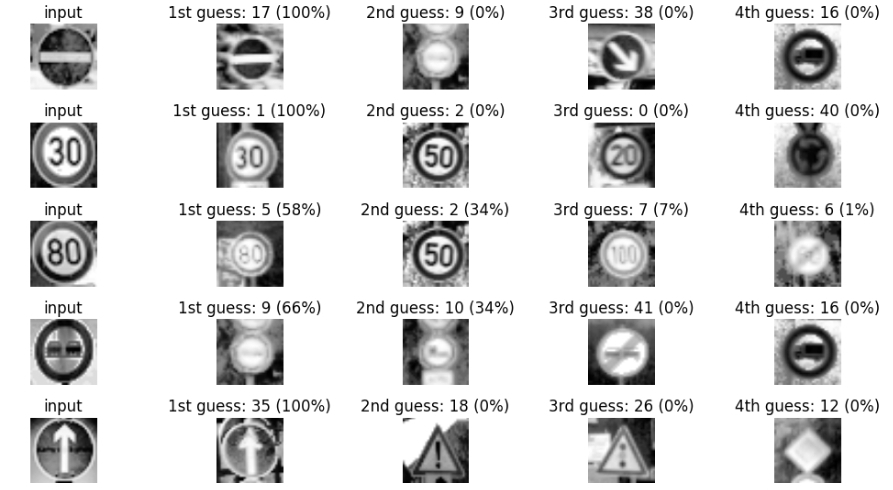

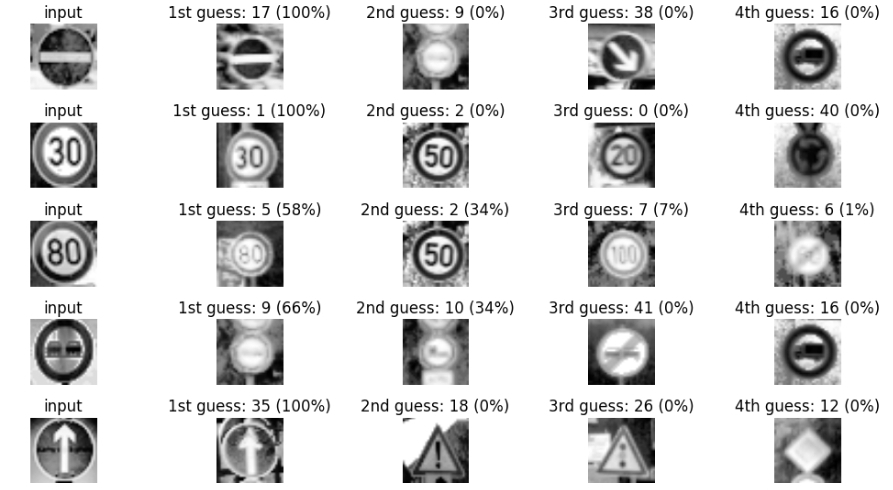

Traffic Sign Recognition

Built and trained a deep neural network to classify traffic signs, using TensorFlow. Experimented with different network architectures. Performed image pre-processing and validation to guard against overfitting.

Road Segmentation

In autonomous driving, the car needs to know where the road is, given a front camera view. In this project, we trained a neural network to label the road pixels in images using a Fully Convolutional Network (FCN). FCN-VGG16 is implemented and trained on the KITTI dataset for road segmentation.

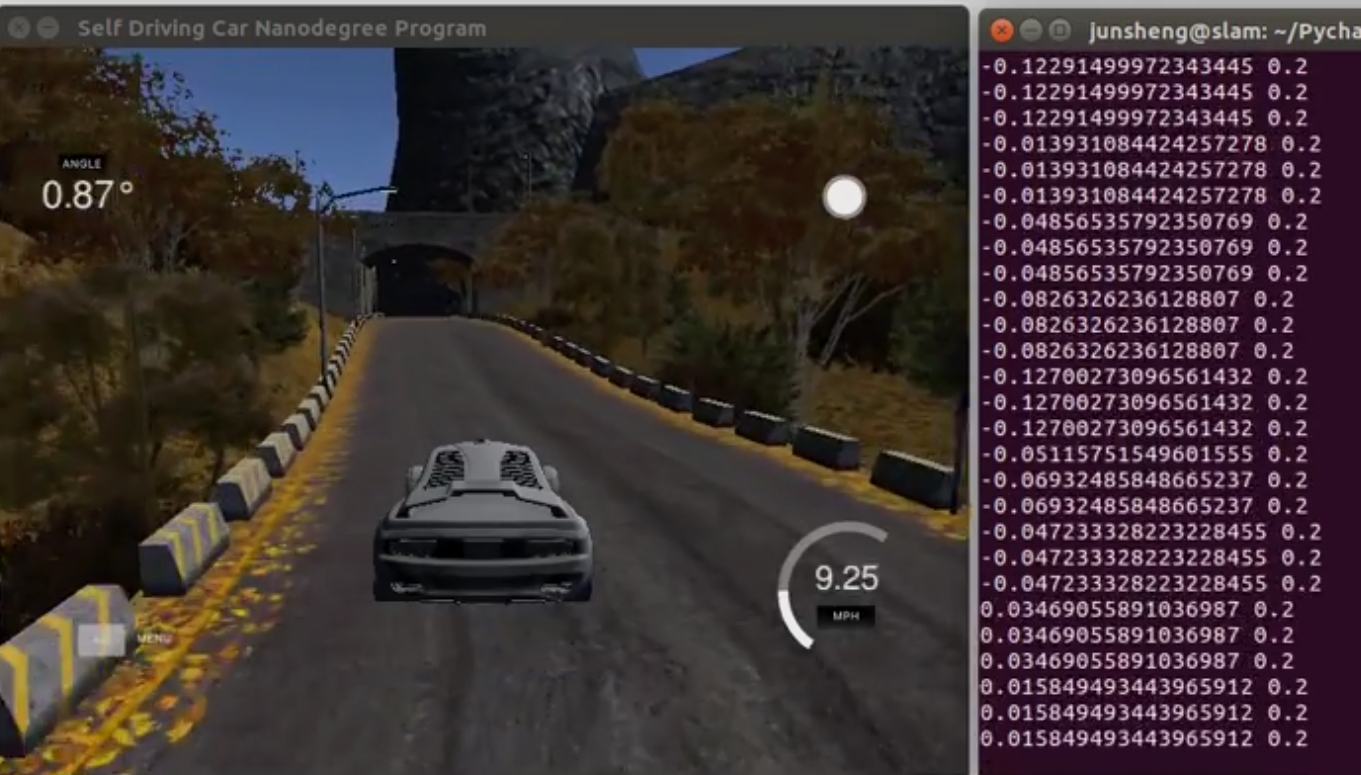

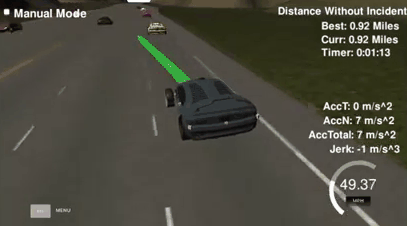

Autonomous driving with PID controller

This project uses PID controllers to control the steering angle and throttle of a car in a game simulator. The simulator provides the cross-track error (CTE) via WebSocket. The PID (proportional-integral-derivative) controllers issue steering and throttle commands to drive the car reliably around the simulator track.
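A minimal PID controller driven by the cross-track error might look like the sketch below. The class, the sign convention (a positive CTE produces a negative steering command), and the clamping range of [-1, 1] are assumptions for illustration, not details taken from the project.

```python
class PID:
    """Textbook PID controller; gains must be tuned for the simulator."""

    def __init__(self, kp, ki, kd):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.prev_error = None
        self.integral = 0.0

    def update(self, error, dt=1.0):
        """Return the control output that counteracts `error`."""
        self.integral += error * dt
        # No derivative term on the first call, when no previous error exists.
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        return -(self.kp * error + self.ki * self.integral + self.kd * derivative)


def steering_command(pid, cte):
    """Clamp the PID output to an assumed steering range of [-1, 1]."""
    return max(-1.0, min(1.0, pid.update(cte)))
```

A second `PID` instance could regulate throttle in the same way, for example using the difference between the current and target speed as its error.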

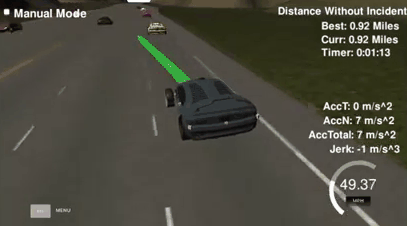

Path Planner

Implemented a simple real-time path planner in C++ to navigate a car around a simulated highway with other traffic, given waypoints and sensor fusion data. The planned path should be safe and smooth, so that the car avoids collisions with other vehicles, keeps within a lane (aside from short periods while changing lanes), and drives according to the speed limit.

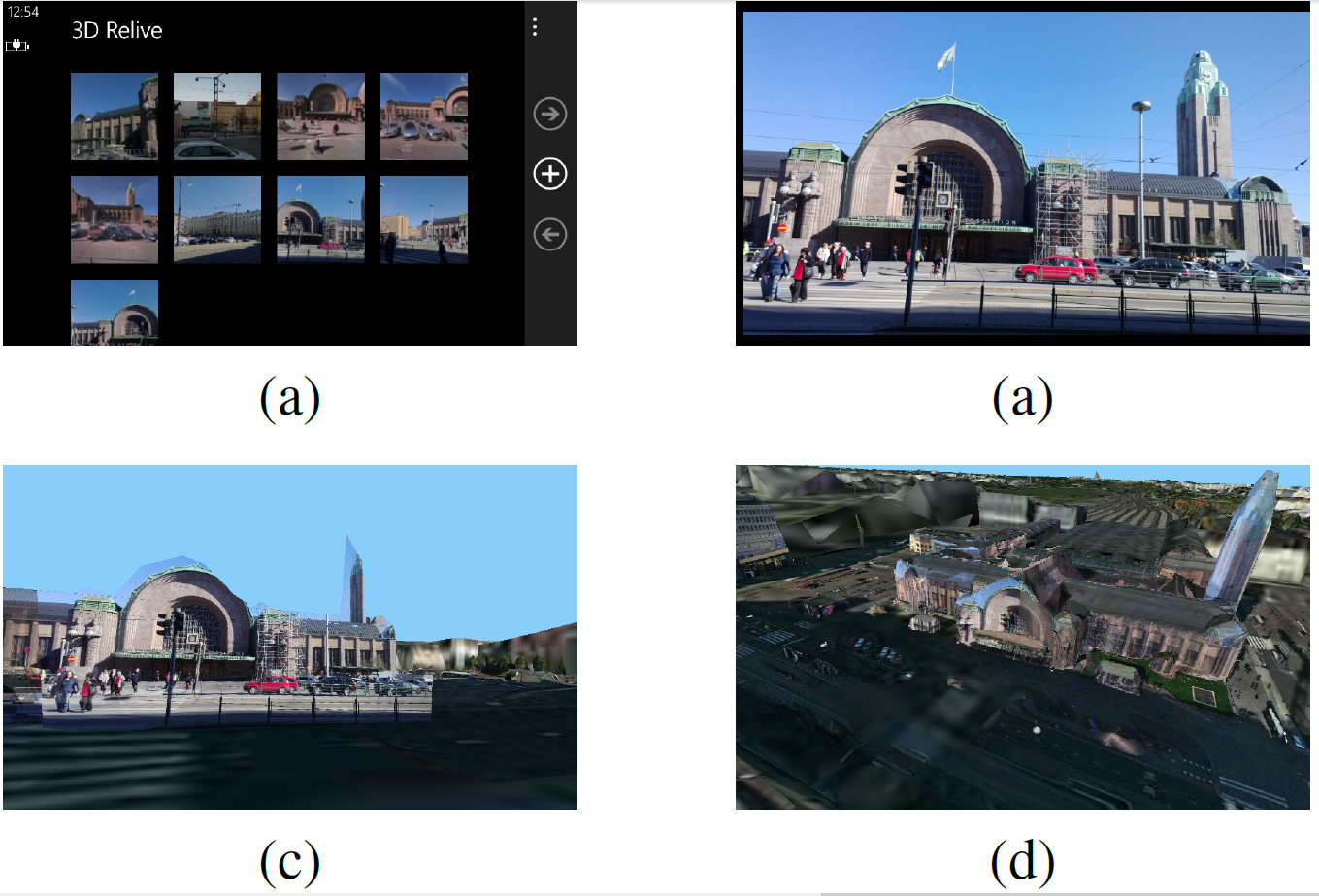

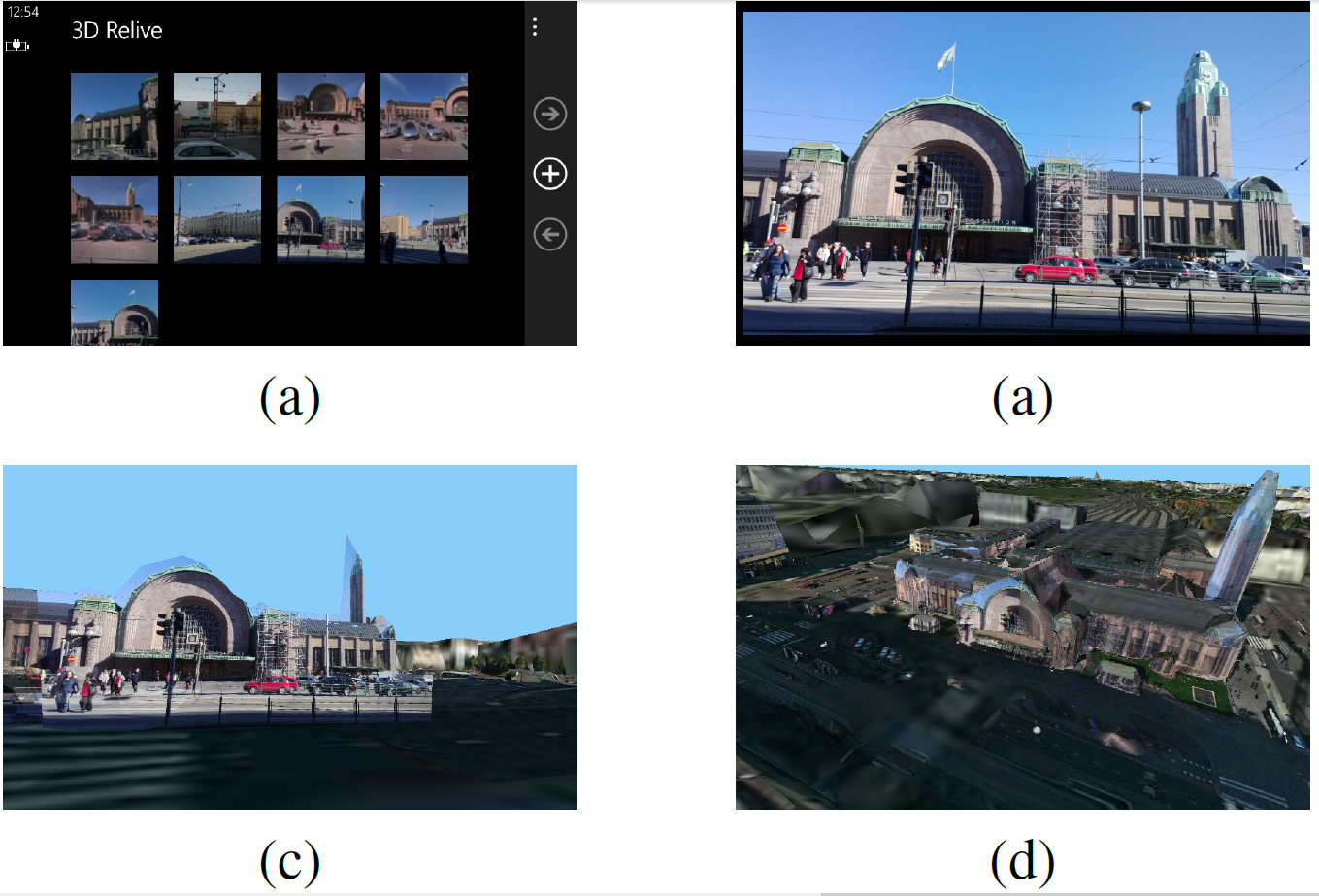

A 3D map augmented photo gallery application on mobile device

We propose a 3D map augmented photo gallery mobile application that allows users to virtually transition from 2D image space to 3D map space, to expand the field of view to surrounding environments that are not visible in the original image, and to change viewing angles among different globally registered images while browsing.

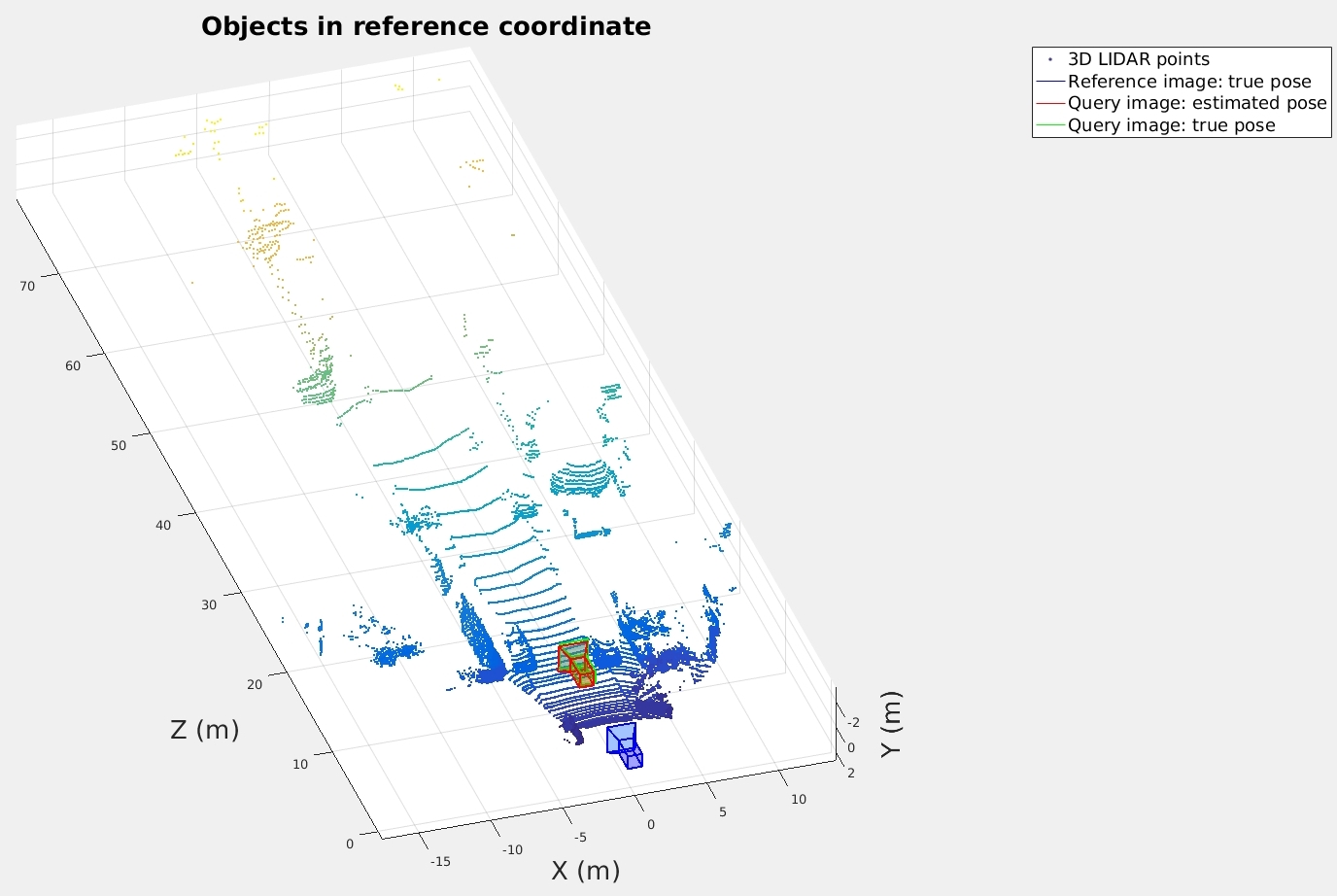

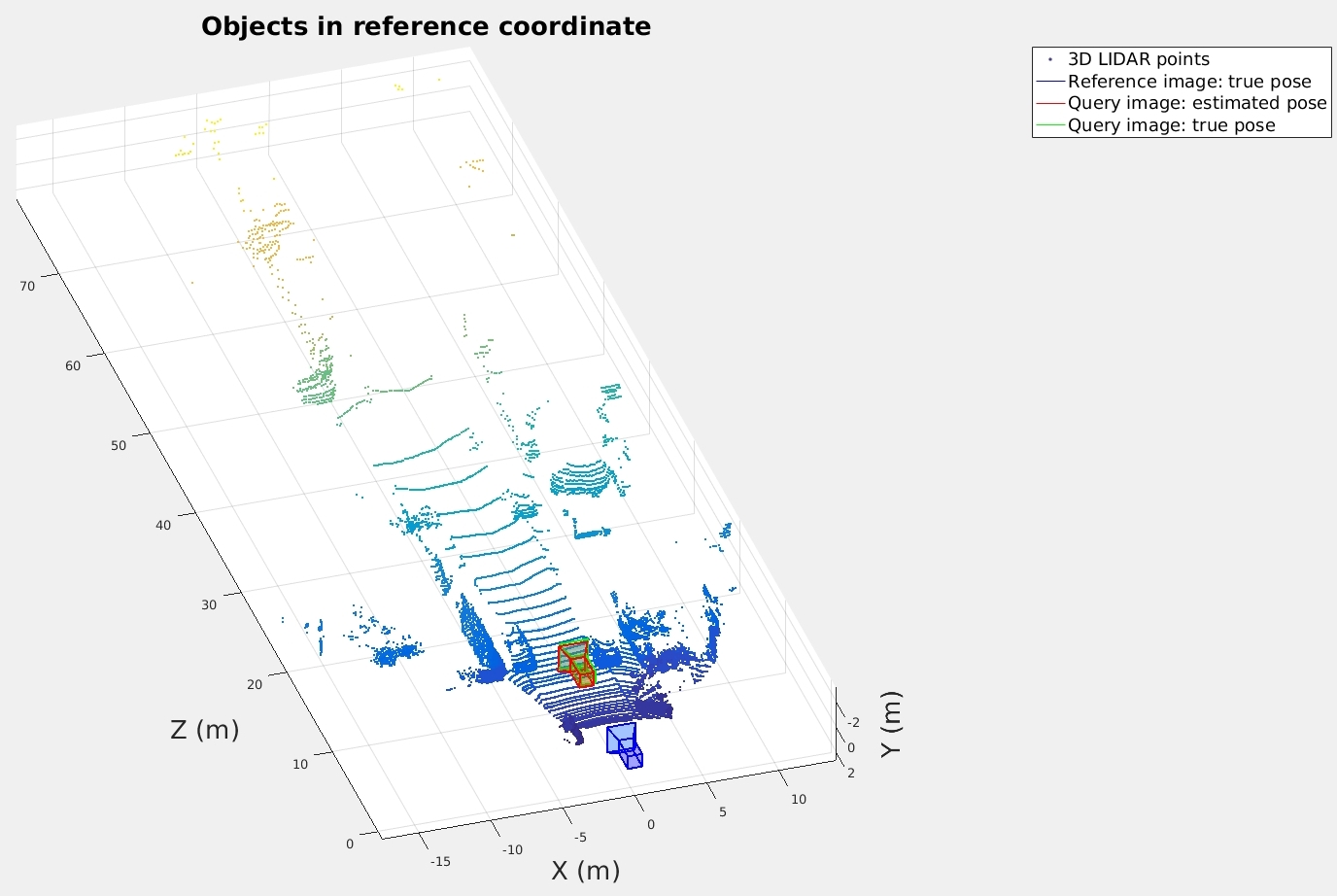

Camera Pose Estimation

Given a map that contains street-view images and lidar data, estimate the 6 DoF camera pose of a query image. System input: a query image, a reference image, and a lidar point cloud, where the reference image and lidar data are known in a global coordinate system. System output: the 6 DoF camera pose of the query image in the global coordinate system.

Augmented and interactive video playback based on global camera pose

We propose a video playback system that allows users to expand the field of view to surrounding environments that are not visible in the original video frame, arbitrarily change the viewing angle, and see superimposed point-of-interest (POI) data in an augmented reality manner during video playback.
